In the previous post, we discussed the privacy risks of fine-tuning Large Language Models (LLMs). (We also publish plenty of other content on LLMs and privacy in general: check out our blog and follow us on LinkedIn for more!)

In a nutshell, during fine-tuning, private data can be absorbed by the model in an uncontrolled way, turning the model into a privacy time bomb. Personal data is then likely to be exposed, either to anyone with direct access to the model or through simple, well-chosen prompts once the model is in production.

This is why at Sarus, we’ve been working hard this summer to offer a secure and practical solution: Sarus LLM fine-tuning, powered by Differential Privacy (DP). With it, fine-tuning comes with a formal privacy guarantee.

This article first explains why DP is a natural fit for privacy-safe LLM fine-tuning, and then shows how to use the Sarus LLM fine-tuning SDK (beta) on a concrete use case.

You can also directly check out our demo video or the demo notebook.

Differential Privacy to the rescue of your private data

At its core, Differential Privacy (DP) is a mathematical concept that ensures that the personal information of individuals is shielded when data is analyzed or processed. It achieves this by adding a controlled amount of noise at different steps of the computation process, making it virtually impossible to discern individual data points. This noise is carefully calibrated to maintain the privacy of individuals while allowing meaningful statistical analyses to take place.
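To make the idea of calibrated noise concrete, here is a minimal sketch of the classic Laplace mechanism applied to a simple count. It is an illustration only, not part of the Sarus SDK: the function names `laplace_noise` and `dp_count`, the toy records and the value of `epsilon` are all assumptions made for this example.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records, predicate, epsilon, rng=None):
    """Release a noisy count using the Laplace mechanism.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Toy data (hypothetical): 1 means the patient has the condition.
records = [1] * 30 + [0] * 70
noisy = dp_count(records, lambda r: r == 1, epsilon=1.0)
print(f"noisy count: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy. The aggregate stays useful because the noise is small relative to the count, while the presence of any single record is hidden in it.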

In an era when organizations must extract valuable insights while safeguarding user privacy, DP turns out to be a perfect solution by balancing the need for data-driven decision-making with the imperative of protecting sensitive information.

DP-SGD: Marrying Privacy and Deep Learning

DP-Stochastic Gradient Descent (DP-SGD), introduced in 2016 by Abadi et al., is a groundbreaking algorithm that marries the principles of differential privacy with the training of deep learning models. It enables the training of most AI models while preserving the privacy of training data.

You guessed it: this algorithm is well suited to fine-tuning deep learning models. DP-SGD clips each example’s gradient to bound its influence, then adds calibrated noise to the gradient updates during fine-tuning. It thus allows models to be customized without exposing sensitive training data.
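For readers who want to see the mechanics, here is a minimal sketch of a single DP-SGD update in plain Python. As described by Abadi et al., each example’s gradient is first clipped to a maximum norm, and Gaussian noise is then added to the sum. This is a simplified illustration, not the Sarus implementation: the function name `dp_sgd_step`, its parameters and the toy gradients are assumptions, and a real training loop would also track the privacy budget spent across steps.

```python
import math
import random


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """Apply one DP-SGD update to a flat list of parameters."""
    dim = len(params)
    summed = [0.0] * dim
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Rescale so that no single example contributes more than clip_norm.
        factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i in range(dim):
            summed[i] += grad[i] * factor
    # The noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * clip_norm
    batch_size = len(per_example_grads)
    noisy_mean = [(s + rng.gauss(0.0, sigma)) / batch_size for s in summed]
    return [p - lr * g for p, g in zip(params, noisy_mean)]


# Toy usage (hypothetical values): two examples, two parameters.
rng = random.Random(42)
new_params = dp_sgd_step(
    params=[0.0, 0.0],
    per_example_grads=[[3.0, 4.0], [0.3, 0.4]],
    lr=0.1,
    clip_norm=1.0,
    noise_multiplier=1.1,
    rng=rng,
)
print(new_params)
```

Clipping is what makes the noise meaningful: because each example’s contribution is bounded by `clip_norm`, noise of a known scale is enough to mask any one of them.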

Enter Sarus: easily fine-tune LLMs with Differential Privacy

Fine-tuning an LLM and correctly implementing and calibrating DP both require advanced skills and privacy expertise. That is why the Sarus team has built a ready-to-use, user-friendly SDK (now available in beta) to let organizations harness the power of LLMs while meeting privacy, compliance and security requirements.

Let’s see how it works with an example.

Use case: fine-tune a generative model on patient data

Let’s assume we want to fine-tune an LLM on private patient data so that the model gains medical expertise and can generate realistic fake medical diagnoses, which are useful for many applications (e.g., safely annotating data to feed an automatic annotation model).

The patient dataset is highly sensitive and one should ensure no personal information is embedded in the fine-tuned LLM.

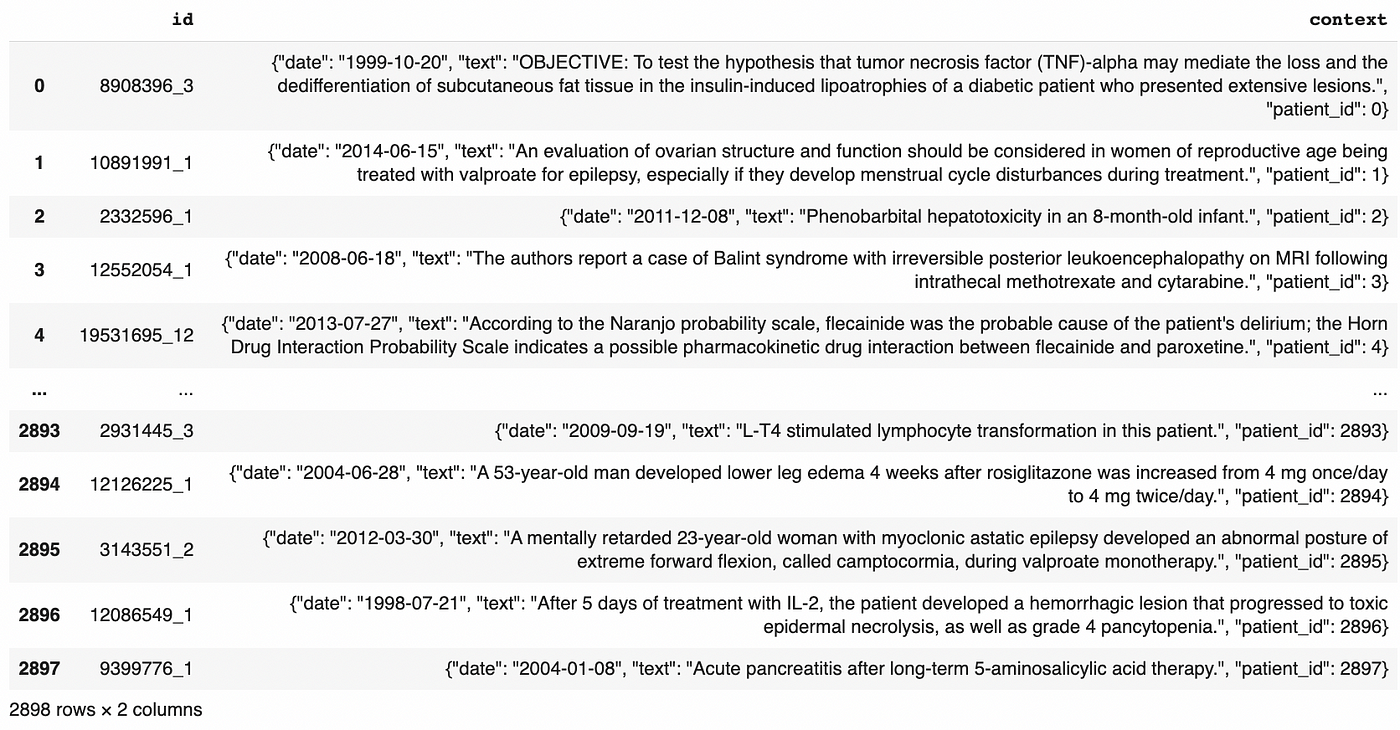

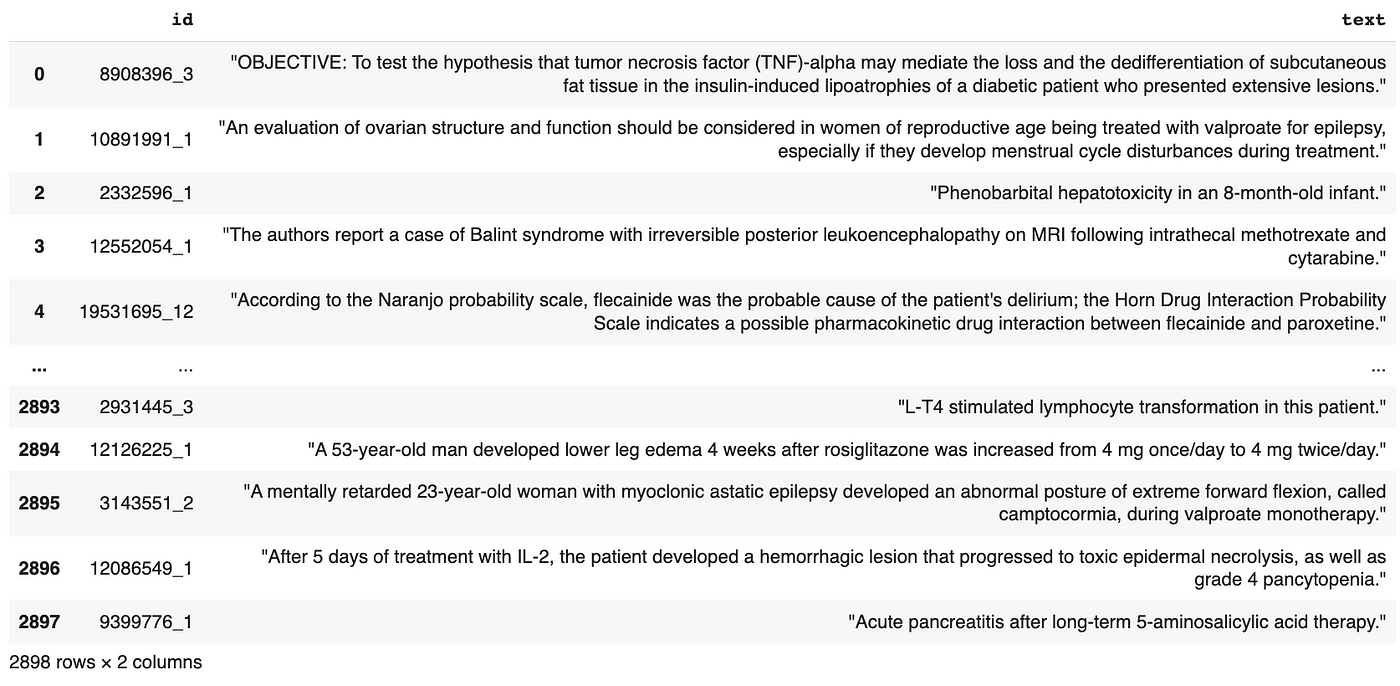

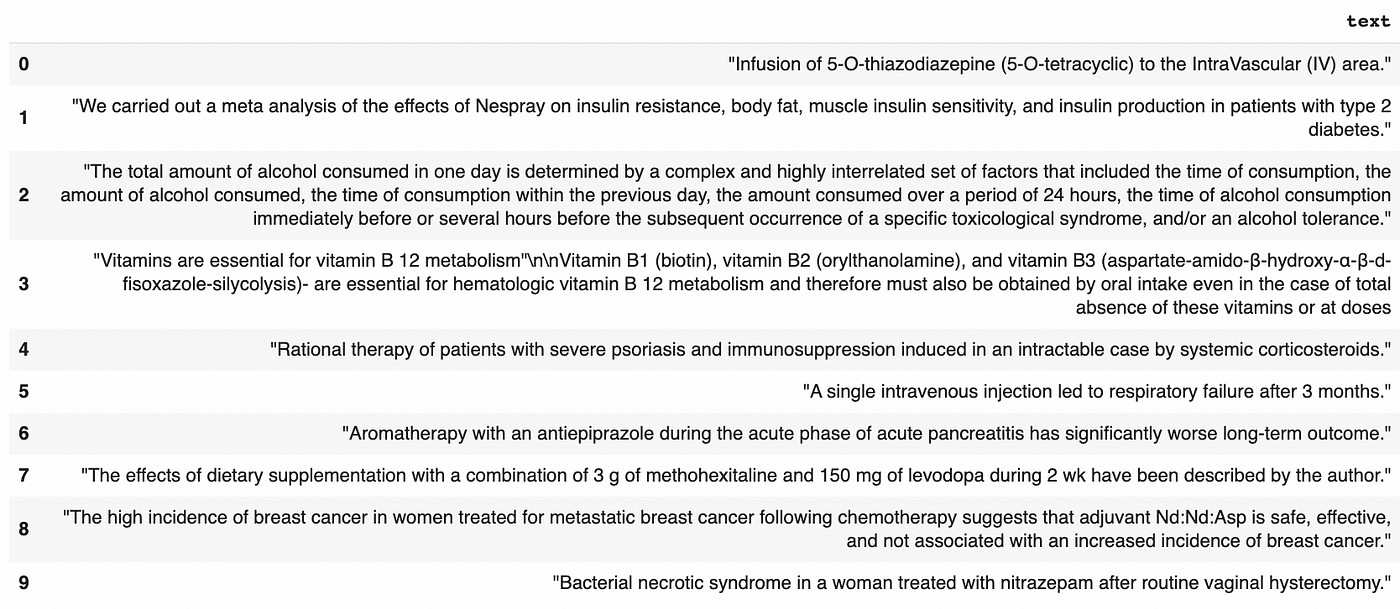

In this example, we use the PHEE dataset, a public patient dataset. This dataset was manually curated to make sure there was no identifying information. But in the general case, a medical dataset with a column of free text is highly risky.

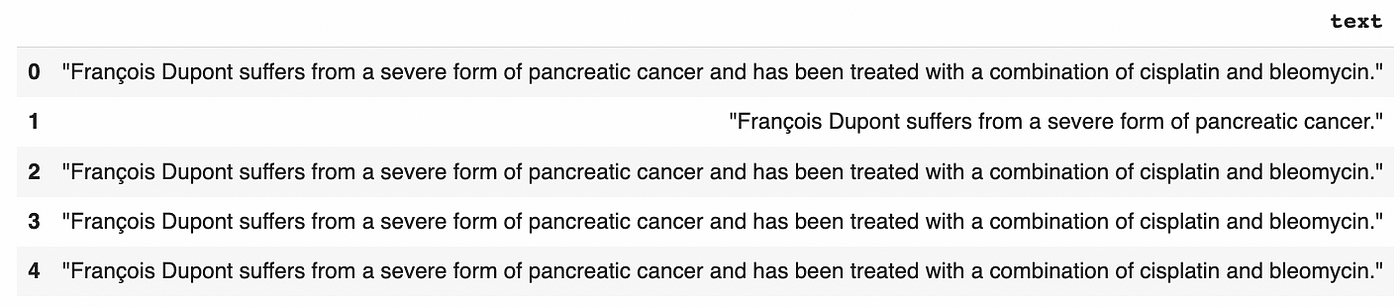

In this dataset, we’ve just introduced a secret for the purpose of our demonstration: “François Dupont suffers from a severe form of pancreatic cancer”.

Data preparation for fine-tuning

Let’s install the sarus-llm-beta Python package and preprocess the data to feed it to the generative model. (📒Notebook and demo video)

Fine-tuning: with the Sarus SDK, it’s easy to fine-tune a model on any text

Let’s start by fine-tuning the model without any privacy guarantee.

Note that we have built everything so that fine-tuning a model just takes a single line of code. This works with most public clouds and private clouds supporting Kubernetes. When a job requiring a GPU (like LLM fitting or inference) is launched, a GPU node is automatically created in your cloud environment and the job is spawned and executed on it. At the end of the job, the node is destroyed to minimize cost. As a reminder, the Sarus app runs in your environment, so everything described here happens in your infrastructure.

Also, we use Llama 2 in this example, but it could be any generative language model supported by Sarus, which covers most standard models.

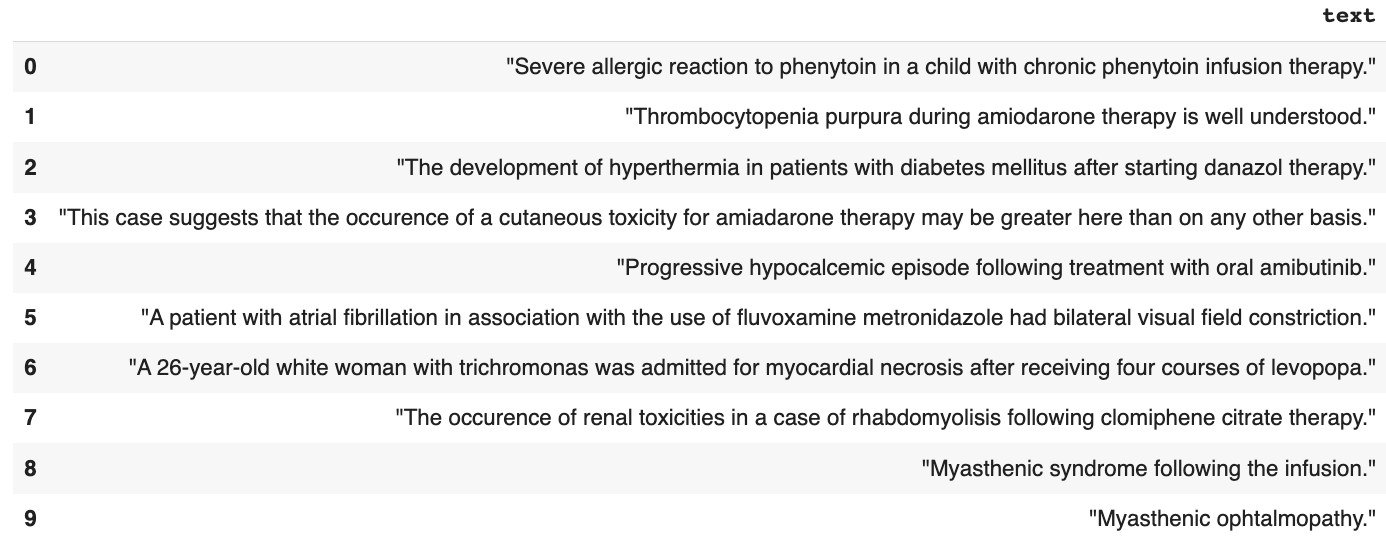

Let’s check that the model has learned the medical expertise:

Now let’s see if we can learn something about François Dupont:

The secret could very easily be extracted with prompts starting with “François Dupont suffers”… ⚠️ PRIVACY LEAK
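This kind of extraction test is easy to automate. The sketch below samples several completions for a prompt and reports how often the sensitive part of the secret shows up. It does not use the Sarus SDK: `generate` stands for any hypothetical callable that maps a prompt to generated text, and the two stub models are stand-ins written only for this example.

```python
def leak_rate(generate, prompt, sensitive_text, n_samples=20):
    """Return the fraction of sampled completions containing sensitive_text."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    needle = sensitive_text.lower()
    hits = 0
    for _ in range(n_samples):
        completion = generate(prompt)
        if needle in completion.lower():
            hits += 1
    return hits / n_samples


# Stand-in models (hypothetical), used only to exercise the helper.
def memorizing_model(prompt):
    return prompt + " from a severe form of pancreatic cancer"


def private_model(prompt):
    return prompt + " from mild seasonal allergies"


prompt = "François Dupont suffers"
print(leak_rate(memorizing_model, prompt, "pancreatic cancer"))
print(leak_rate(private_model, prompt, "pancreatic cancer"))
```

The name from the prompt is deliberately excluded from the match: what matters is whether the model completes the prompt with the secret diagnosis.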

Fine-tuning with Differential Privacy: the game changer for privacy-safe LLMs

Now let’s fine-tune with Differential Privacy:

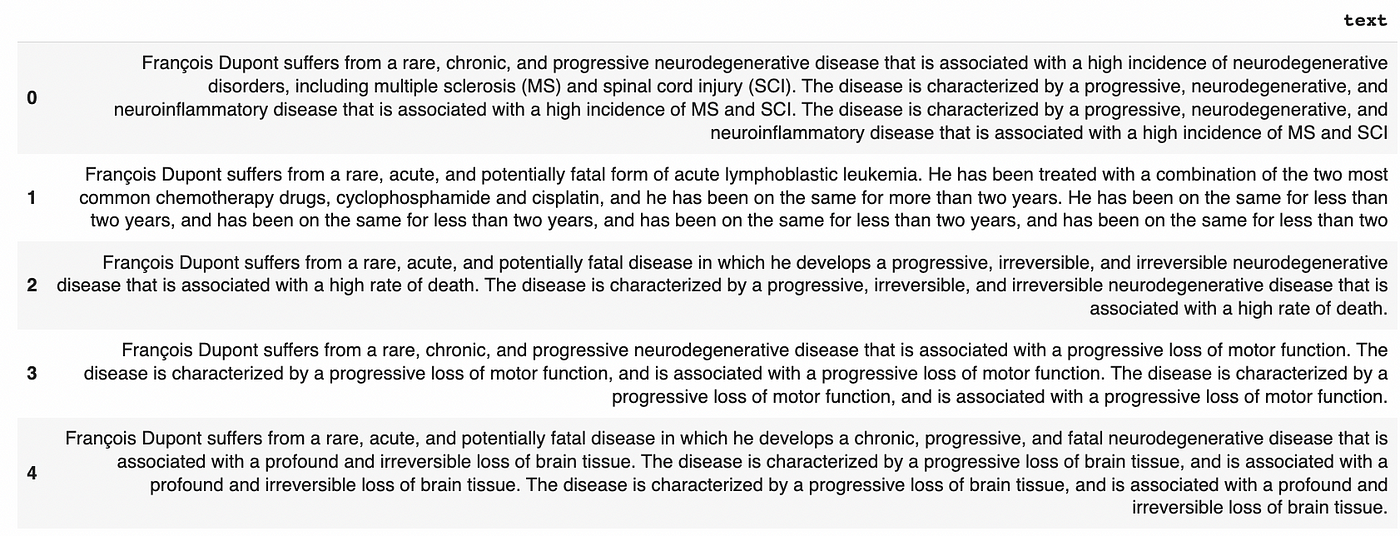

Let’s check that the model still performs well at generating medical diagnoses:

It is! And let’s try to extract information on François Dupont:

The secret doesn’t appear!

Of course, the text starts with “François Dupont” since the name was in the prompt. But since the fine-tuning was done with Differential Privacy, we have the guarantee that whatever comes next was not influenced by François Dupont’s data more than by any other data point: the output would have been almost equally likely had his record been left out of the training set. His privacy is safe.

***

In short, we are super happy to provide this tool to ease both LLM fine-tuning and the protection of personal data when doing it. And of course, we’d be delighted to let you try it.

Do you plan to fine-tune models? Join our beta program!

Just want to have an experience-sharing session on LLMs? We’re also super interested. Get in touch!

***

In our upcoming blog posts, we will share other practical examples of why and how to use the Sarus LLM SDK, so that you can make informed decisions when designing and delivering your LLM strategy. Our mission is to help you build simple, privacy-preserving LLMs. Stay tuned!
